Key Takeaways

- The concept of a computer dates back to Charles Babbage's mechanical difference and analytical engines, but the first electronic computer was the brainchild of Dr. John Vincent Atanasoff and his graduate student Clifford Berry, resulting in the Atanasoff-Berry Computer (ABC) by 1942.

- World War II accelerated computer development, leading to machines like ENIAC for artillery calculations and Colossus for code-breaking; by 1951, the first commercial computer, UNIVAC, was built for the U.S. Census Bureau.

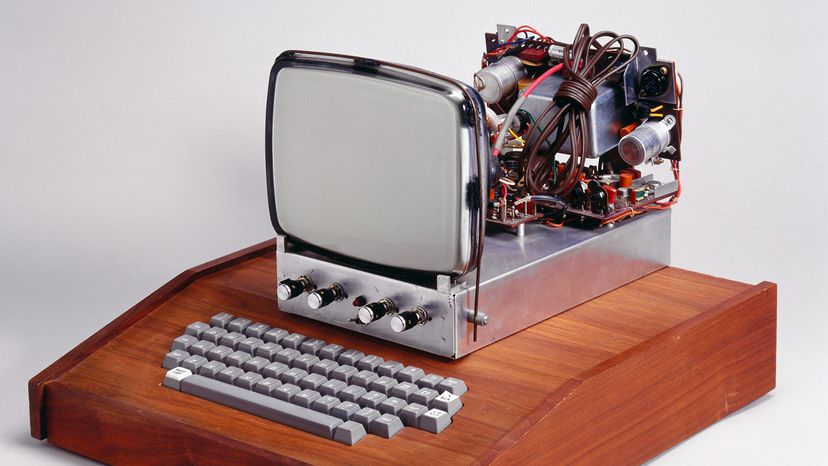

- The evolution of personal computers began with prototypes like Hewlett-Packard's HP 9100A scientific calculator, Apple's Apple I and Apple II, and culminated in IBM's 5150 Personal Computer in 1981, which became a staple in businesses globally.

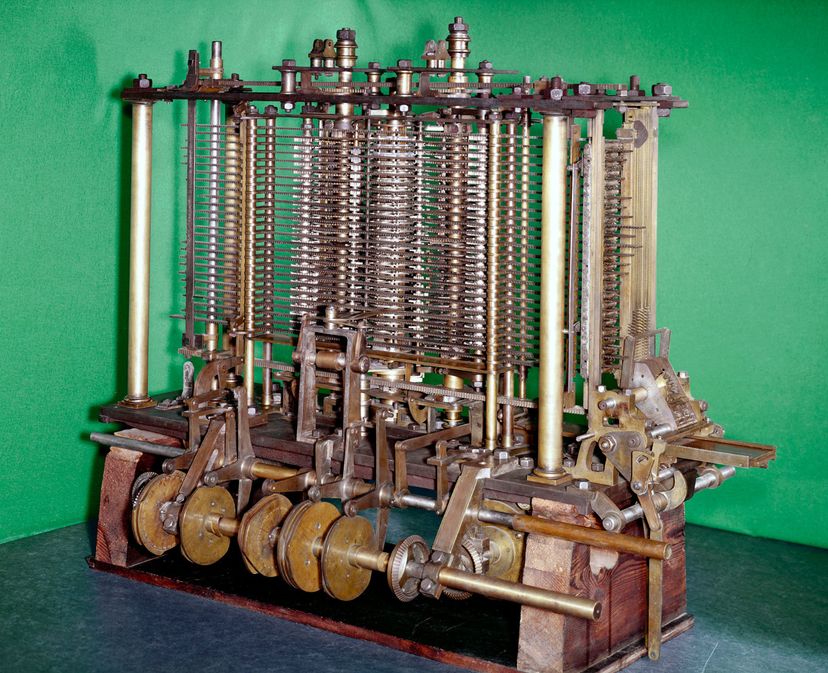

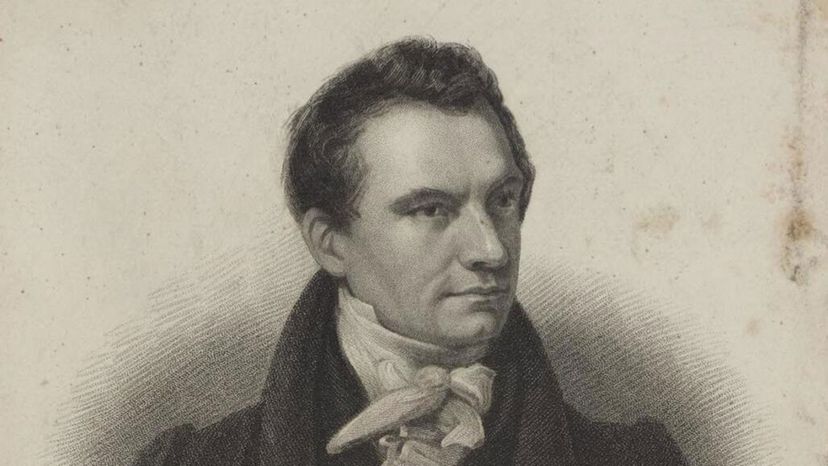

We could argue that the first computer was the abacus or its descendant, the slide rule, invented by William Oughtred in 1622. But many people consider English mathematician Charles Babbage's analytical engine to be the first computer resembling modern machines.


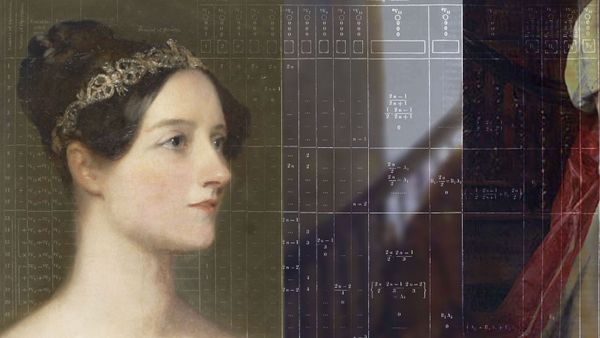

Before Babbage came along, a "computer" was a person, someone who literally sat around all day, adding and subtracting numbers and entering the results into tables. The tables then appeared in books, so other people could use them to complete tasks, such as launching artillery shells accurately or calculating taxes.

In fact, Babbage wrote that he was daydreaming over logarithmic tables during his time at Cambridge, sometime around 1812-1813, when he first imagined that a machine could do the job of a human computer. In July 1822, Babbage wrote a letter to the Royal Society proposing the idea that machines could do calculations based on a "method of differences." The Royal Society was intrigued and agreed to fund development on the idea. The first machine design that came out of these efforts was Babbage's first difference engine.

A mammoth number-crunching project also helped inspire Babbage. In 1792 the French government had appointed Gaspard de Prony to supervise the creation of the Cadastre, a set of logarithmic and trigonometric tables. The French wanted to standardize measurements in the country and planned to use the tables to aid the conversion to the metric system. De Prony was in turn inspired by Adam Smith's famous work "The Wealth of Nations," in which Smith described how the division of labor improved efficiency in pin manufacturing. De Prony wanted to apply the division of labor to his mathematical project.

Unfortunately, once the 18 volumes of tables – with one more describing mathematical procedures – were complete, they were never published.

In 1819, Babbage visited the City of Light and viewed the unpublished manuscript with page after page of tables. If only, he wondered, there were a way to produce such tables faster, with less manpower and fewer mistakes. He thought of the many marvels generated by the Industrial Revolution. If creative and hardworking inventors could develop the cotton gin and the steam locomotive, then why not a machine to make calculations?

Babbage returned to England and decided to build just such a machine. His first vision was something he dubbed the difference engine, which worked on the principle of finite differences: tabulating the values of a polynomial by repeated addition, without any multiplication or division. He secured 1,500 pounds from the British government in 1823 and hired engineer Joseph Clement to begin construction on the difference engine.
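To make the principle of finite differences concrete, here is a minimal Python sketch. It illustrates the idea only and is not a model of Babbage's actual mechanism. The function names and the sample polynomial x² + x + 41 are choices made for this example. Setting up the starting column takes a few subtractions, which would have been done by hand; after that, every new table entry comes from addition alone.

```python
def initial_differences(seed_values):
    """Build the starting column [f(0), first difference, second difference, ...].

    seed_values holds f(0), f(1), ..., f(n) for a degree-n polynomial.
    This setup step uses subtraction; it happens once, before tabulating.
    """
    column = []
    row = list(seed_values)
    while row:
        column.append(row[0])
        # Differences between neighboring entries form the next row.
        row = [b - a for a, b in zip(row, row[1:])]
    return column


def tabulate(column, count):
    """Produce `count` successive table entries using only addition."""
    state = list(column)
    table = []
    for _ in range(count):
        table.append(state[0])
        # Each entry absorbs the difference just below it. Working top-down
        # means every addition uses the not-yet-updated lower value.
        for i in range(len(state) - 1):
            state[i] += state[i + 1]
    return table


# Seed with f(0), f(1), f(2) for f(x) = x*x + x + 41 (hypothetical example).
start = initial_differences([41, 43, 47])
print(tabulate(start, 6))
```

For a polynomial of degree n, the nth difference is constant, which is why a fixed, small set of running totals is enough to keep extending the table indefinitely.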

Clement was a well-respected engineer and suggested improvements to Babbage, who allowed Clement to implement some of his ideas. Unfortunately, in 1833 the two had a falling out over the terms of their arrangement. Clement quit, ending his work on the difference engine.

But, as you might have guessed, the story doesn't end there.
