Jan. 25, 1979: Robert Williams, a 25-year-old factory worker in a Ford Motor Company casting plant in Flat Rock, Michigan, is asked to scale a massive shelving unit to manually count the parts there. The five-story machine used to retrieve the castings is giving false readings, and it's Williams' job to go up and find out how many there really are.

While Williams is up there, doing the job he's ordered to do, a robot arm also tasked with parts retrieval goes about its work. Soon enough, the robot silently comes upon the young man, striking him in the head and killing him instantly. The robot keeps on working, and Williams lies there dead for 30 minutes before his body is found by concerned co-workers, according to Knight-Ridder reporting.

On that wintry day, Williams becomes the first human in history to be killed by a robot.

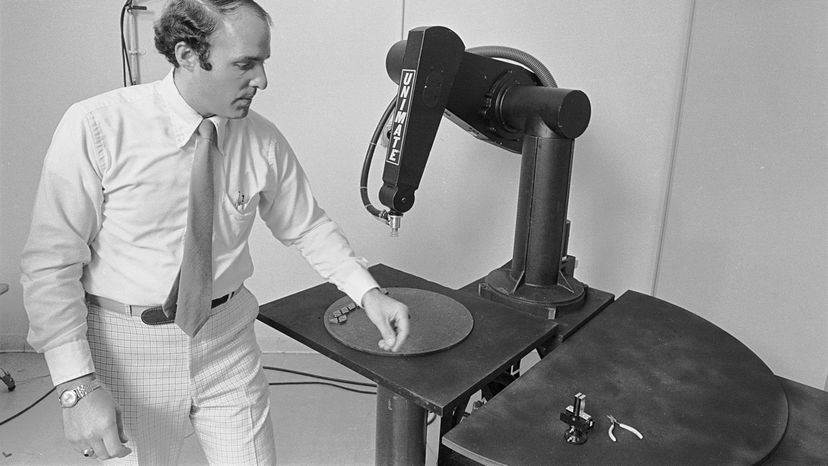

The death, however, was completely unintentional. There simply weren't safeguards in place to protect Williams. No alarms notified him of the approaching arm, and no technology could alter the robot's behavior in the presence of a human. In 1979, the artificial intelligence involved wasn't sophisticated enough to do anything to prevent such a death. A jury agreed that not enough care had been put into the design of the robot to prevent a death like this. Williams' family won a $10 million wrongful-death lawsuit against Unit Handling Systems, a division of Litton Industries and the manufacturer that designed the robot.

The next death by robot would occur slightly more than two years later, in Japan, under similar circumstances: A robot arm again failed to sense a worker, 37-year-old Kenji Urada, and accidentally pushed him to his death.

In the ensuing years, roboticists, computer scientists and artificial intelligence experts have continued to struggle with the issue of how robots can safely interact with humans without causing them harm.

Decades later, reports of human deaths caused by robots or artificial intelligences feel more commonplace. Uber and Tesla have made the news with reports of their autonomous and self-driving cars, respectively, getting into accidents and killing passengers or striking pedestrians. Though many safeguards now are in place, the problem still hasn't been solved.

None of these deaths are caused by the will of the robot; rather, they're all accidental. But there's a worry, fanned by movies and science fiction stories like the "Terminator" and "Matrix" series, that artificial intelligences could develop a will of their own and, in that development, desire to harm a human.

Shimon Whiteson, associate professor in the department of computer science at the University of Oxford and chief scientist and co-founder of Morpheus Labs, calls this concern the "anthropomorphic fallacy." Whiteson defines the fallacy as "the assumption that a system with human-like intelligence must also have human-like desires, e.g., to survive, be free, have dignity, etc. There's absolutely no reason why this would be the case, as such a system will only have whatever desires we give it."

Value misalignment, he argues, is the greater existential threat, where a gap exists between what a programmer tells a machine to do and what the programmer really meant to happen. "How do you communicate your values to an intelligent system such that the actions it takes fulfill your true intentions? The discrepancy between the two becomes more consequential as the computer becomes more intelligent and autonomous," explains Whiteson.

Whiteson also points to another threat: scientists purposefully designing robots that can kill human targets without human intervention for military purposes. That's why AI and robotics researchers around the world published an open letter calling for a worldwide ban on such technology. And that's why the United Nations is again meeting in 2018 to discuss if and how to regulate so-called "killer robots." These robots wouldn't need to develop a will of their own to kill; they could simply be programmed to do it.
