Being a human is far easier than building a human.

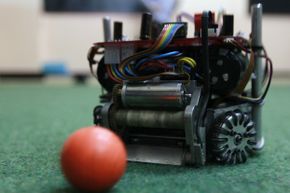

Take something as simple as playing catch with a friend in the front yard. When you break down this activity into the discrete biological functions required to accomplish it, it's not simple at all. You need sensors, transmitters and effectors. You need to calculate how hard to throw based on the distance between you and your companion. You need to account for sun glare, wind speed and nearby distractions. You need to determine how firmly to grip the ball and when to squeeze the mitt during a catch. And you need to be able to process a number of what-if scenarios: What if the ball goes over my head? What if it rolls into the street? What if it crashes through my neighbor's window?

These questions illustrate some of the most pressing challenges in robotics, and they set the stage for our countdown. We've compiled a list of the 10 hardest things to teach robots, ordered roughly from "easiest" to "most difficult." These are the 10 things we'll need to conquer if we're ever going to realize the promises made by Bradbury, Dick, Asimov, Clarke and all the other storytellers who have imagined a world in which machines behave like people.