It happened again. The local weatherman had warned that a major winter storm had set its sights on central Virginia. Precipitation would start the following morning, and we should expect 8 inches (20 centimeters) of the white stuff by evening. The weather service issued a winter storm warning, and red triangles popped up on my desktop and smartphone weather apps. My boys bounced around the house, celebrating the snow day that would soon be commandeered for sledding, loafing and video-game playing.

The next day dawned gray and brooding, and the local weather crew reiterated the previous night's forecast: The storm was still lurching its way northeastward. My boys climbed on the bus, smugly telling me they'd see me at noon, after an early school dismissal. But noon arrived without the slightest hint of precipitation. So did afternoon. When they stepped off the bus at 3:45, my boys glowered at the sky, all the while insisting that the storm had only been delayed. The next day would be a horror of snowdrifts, slick roads and power outages. Even the weatherman clung to the party line: The storm was still coming, although now we might expect just 3 or 4 inches (about 7-10 centimeters). Yet when we finally turned off the lights at 11:30 that evening, not a drop of moisture -- frozen or otherwise -- had fallen from the sullen skies draped over the Shenandoah Valley.

As you've guessed by now, the storm never came. We woke up the next morning to clearing skies and daily routines unaffected by snow. Years ago, we would have scoffed at the meteorologist's inability to make an accurate forecast. How could someone, with so much technology at his or her disposal, so much data to crunch in supercomputers, be wrong so much of the time? How could modern science continue to fail us so miserably?

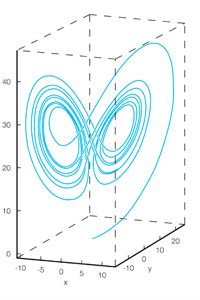

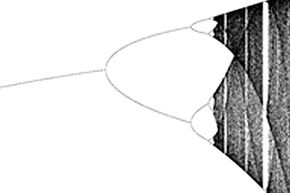

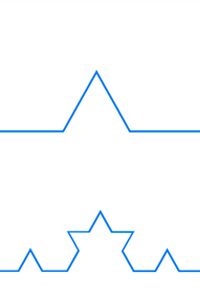

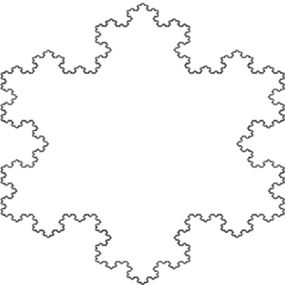

Now we know. The weatherman had fallen victim to chaos. Not the kind of chaos we remember from English class -- complete disorder and confusion -- but chaos in the scientific sense: a property of highly complex systems, such as the atmosphere, the economy and populations of living things. Indeed, perhaps all systems, even those that seem to conform nicely to scientific laws as solid as bedrock, exhibit chaotic features. If that's true, then everything we know about everything is not necessarily wrong, but different. The ordered, obsequious universe we now take for granted may be the exception to the rule, instead of the other way around. At the very least, our glimpses of order could be byproducts of chaos, brief flashes of structure and form against a backdrop of seething complexity.

But that's getting ahead of the story. To understand chaos, we have to get to know its counterpoint. And that takes us back to the 17th century and some of the biggest names in the history of science.