People hold very different opinions about the nuclear power industry. Some see nuclear power as an important green technology that emits no carbon dioxide while producing huge amounts of reliable electricity. They point to an admirable safety record that spans more than two decades.

Others see nuclear power as an inherently dangerous technology that poses a threat to any community located near a nuclear power plant. They point to accidents like the Three Mile Island incident and the Chernobyl explosion as proof of how badly things can go wrong.


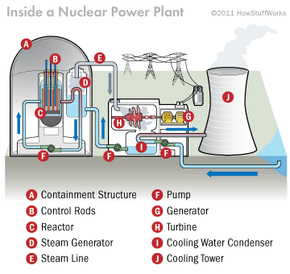

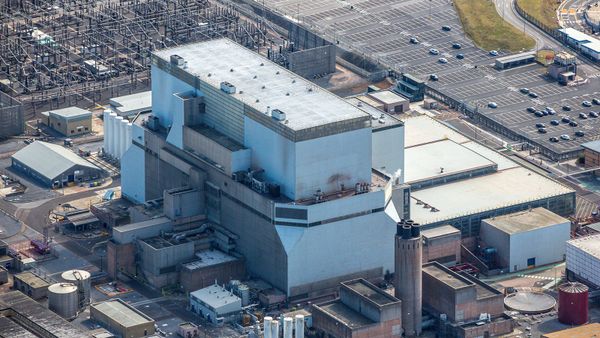

Either way, commercial nuclear reactors are a fact of life in many parts of the developed world. Because they run on radioactive fuel, these reactors are designed and built to the highest standards of the engineering profession, with the perceived ability to handle nearly anything that nature or mankind can dish out. Earthquakes? No problem. Hurricanes? No problem. Direct strikes by jumbo jets? No problem. Terrorist attacks? No problem. Strength is built in, and layers of redundancy are meant to handle any operational abnormality.

Shortly after an earthquake hit Japan on March 11, 2011, however, those perceptions of safety began to change rapidly. Explosions rocked several reactors in Japan, even though initial reports indicated that the quake itself had caused no problems. Fires broke out at the Onagawa plant, and there were explosions at the Fukushima Daiichi plant.

So what went wrong? How can such well-designed, highly redundant systems fail so catastrophically? Let's take a look.
